I was recently blown away by Lean Rada’s excellent blog post on CSS-only blurry image placeholders (seriously, it’s some very creative work!) and I wanted to try generating image placeholders for a Cloudflare Workers app that I’m working on.

If you’re in a hurry, the code can be found in this GitHub repo along with a little demo app.

A lot of the existing docs on generating these image placeholders rely on modules like Sharp which don’t run on Cloudflare Workers. You could also compile a native module into WASM and use that, but that’s quite a lot of work to sort out compilers and config!

Luckily you can ditch all of these and just leverage the platform’s Image bindings directly.

The Image bindings let you transform, resize, filter, rotate, add watermarks, etc.

```typescript
const response = (
  await env.IMAGES.input(stream)
    .transform({ rotate: 90 })
    .transform({ width: 128 })
    .transform({ blur: 20 })
    .output({ format: "image/png" })
).response();
```

There were two things missing from the docs [1] that I discovered while looking at the type definitions that help us out here:

- You can request an array of rgb or rgba pixel values from the Workers binding directly. No need to write code to parse an image file format yourself.
- There is a “fit” for resizing images called “squeeze” that ignores the existing aspect ratio. It is currently missing from the docs.
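As a rough illustration of what working with that raw output can look like, here is a minimal sketch that splits a flat pixel buffer into per-pixel triples. The `toPixels` helper and the `RGB` type are hypothetical names, not part of the Images binding, and the sketch assumes the bytes arrive in r, g, b (optionally a) order for each pixel.

```typescript
type RGB = [number, number, number];

// Hypothetical helper: split a flat pixel buffer into [r, g, b] triples.
// `channels` is 3 for "rgb" output and 4 for "rgba" (alpha is dropped here).
// Assumes channel bytes are stored consecutively for each pixel.
function toPixels(data: Uint8Array, channels: 3 | 4 = 3): RGB[] {
  if (data.length % channels !== 0) {
    throw new Error(`Buffer length ${data.length} is not a multiple of ${channels}`);
  }
  const pixels: RGB[] = [];
  for (let i = 0; i < data.length; i += channels) {
    pixels.push([data[i], data[i + 1], data[i + 2]]);
  }
  return pixels;
}
```

A two-pixel rgb buffer such as `new Uint8Array([255, 0, 0, 0, 255, 0])` comes back as one red and one green triple.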

Another issue was an inability to get the resized image dimensions from the output. When requesting an rgb output you receive an array with a single dimension with 3 values per pixel. Unlike a photo format this does not encode any information about the width or height of the image. I work around this by calculating the expected dimensions from the original’s dimensions.
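One way to sketch that workaround: estimate the resized size from the original's aspect ratio, then sanity-check the estimate against the number of pixels actually returned. This is not the code from the repo. `inferResizedDimensions` and its parameters are hypothetical, and it assumes the image is scaled to fit inside a square box and that the resizer's rounding may differ from `Math.round` by at most one pixel.

```typescript
interface Dimensions {
  width: number;
  height: number;
}

// Hypothetical helper: estimate the size of an image scaled to fit inside a
// maxDimension x maxDimension box, then reconcile it with the pixel count.
function inferResizedDimensions(
  originalWidth: number,
  originalHeight: number,
  maxDimension: number,
  pixelCount: number,
): Dimensions {
  const scale = Math.min(maxDimension / originalWidth, maxDimension / originalHeight);
  const estimate: Dimensions = {
    width: Math.max(1, Math.round(originalWidth * scale)),
    height: Math.max(1, Math.round(originalHeight * scale)),
  };

  // The resizer's exact rounding is an unknown here, so let each side be off by one.
  for (const dw of [0, -1, 1]) {
    const width = estimate.width + dw;
    if (width < 1 || pixelCount % width !== 0) continue;
    const height = pixelCount / width;
    if (Math.abs(height - estimate.height) <= 1) return { width, height };
  }
  throw new Error(
    `Cannot reconcile ${pixelCount} pixels with ~${estimate.width}x${estimate.height}`,
  );
}
```

For an rgb buffer the pixel count would be `buffer.length / 3`, and for rgba `buffer.length / 4`. Throwing on a mismatch is a deliberate choice: a wrong width silently produces a skewed placeholder, which is harder to notice than an error.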

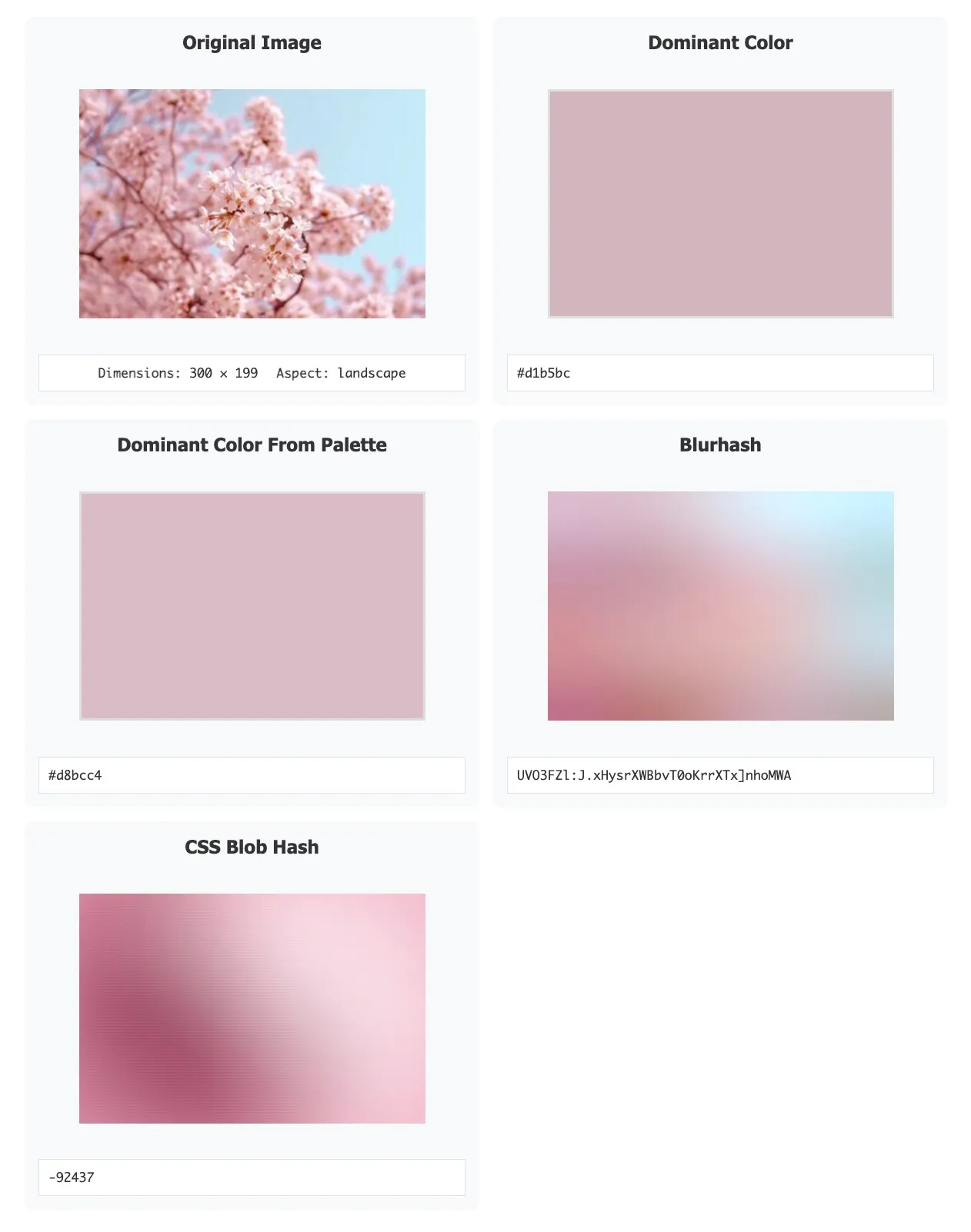

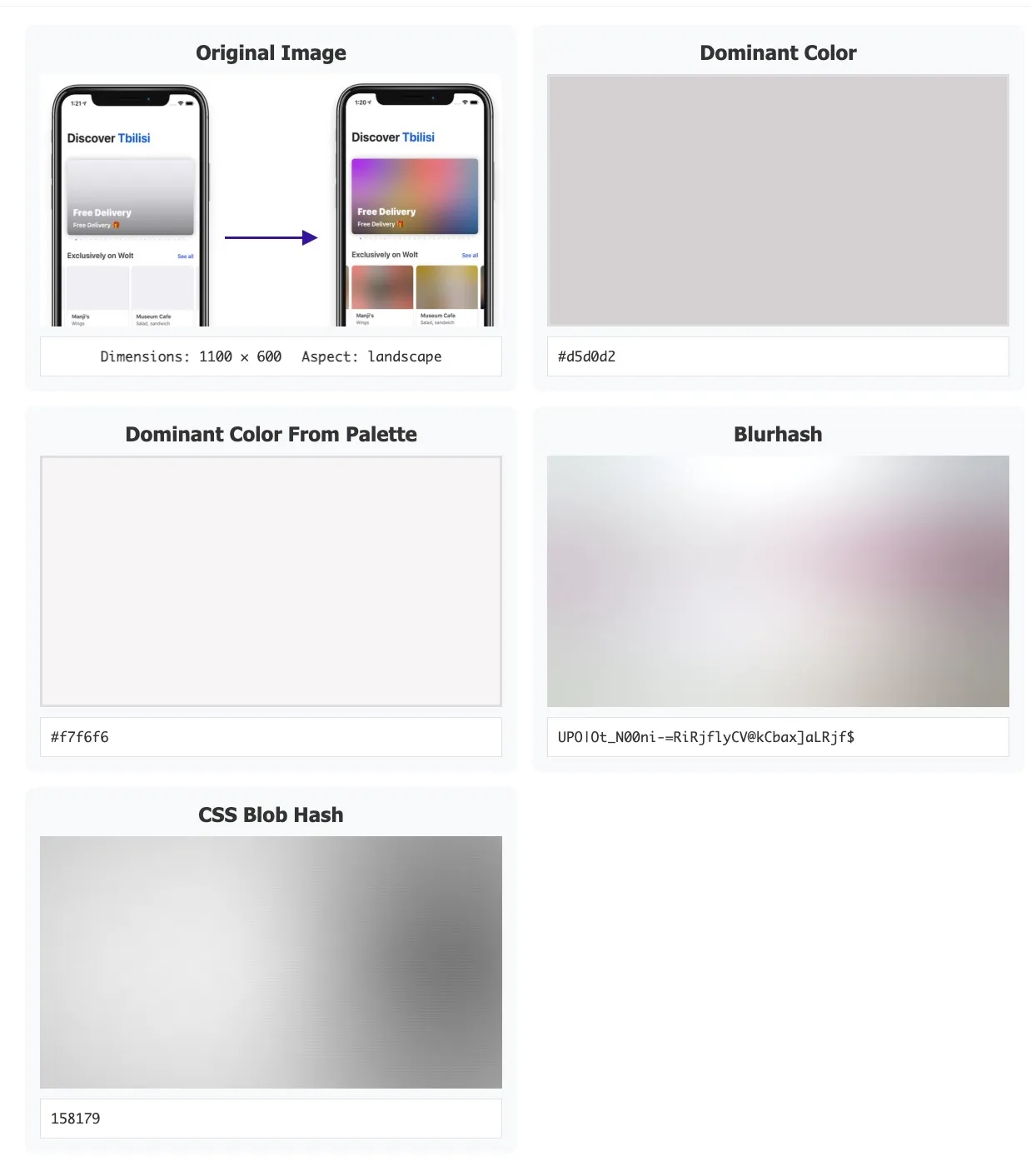

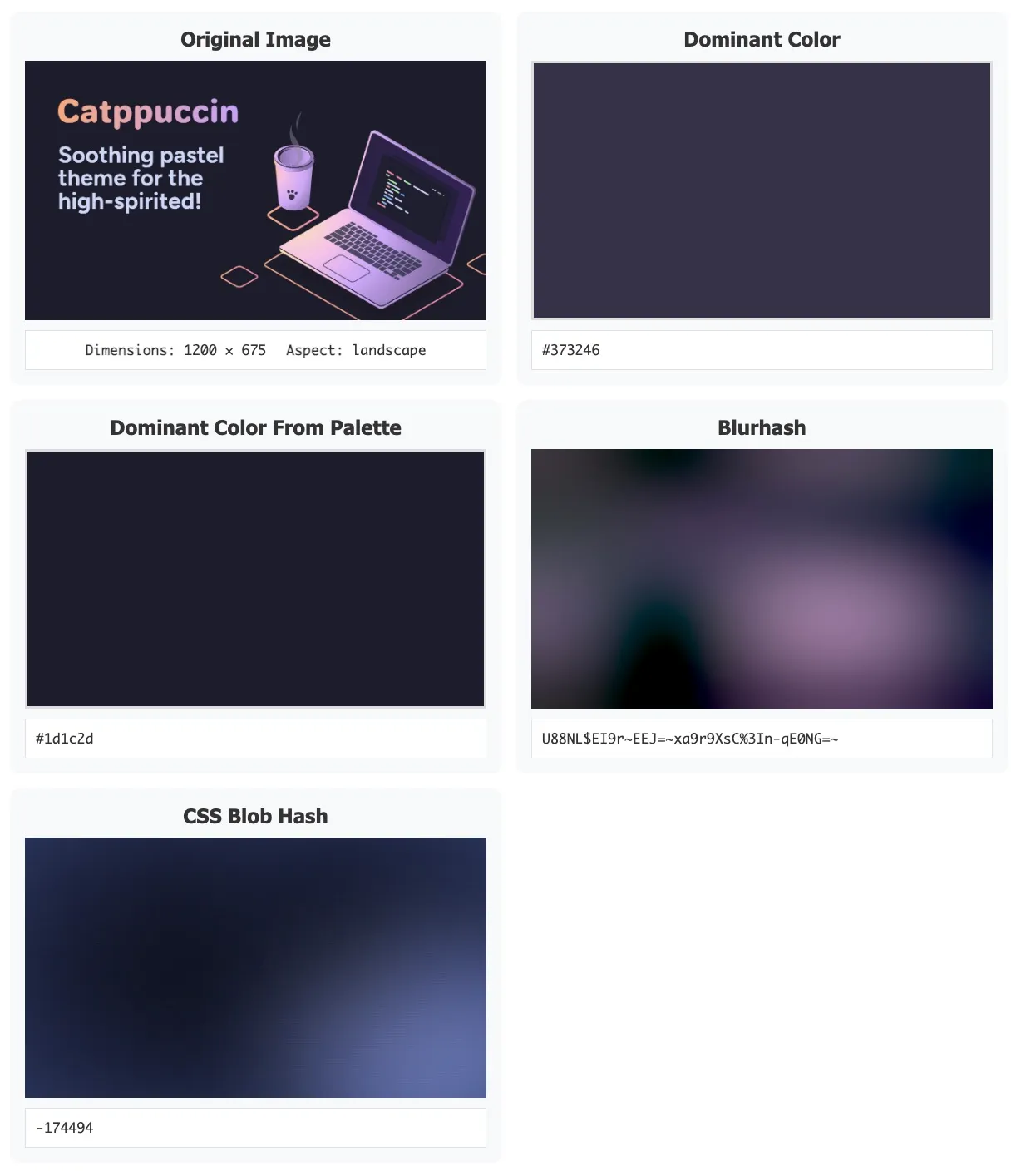

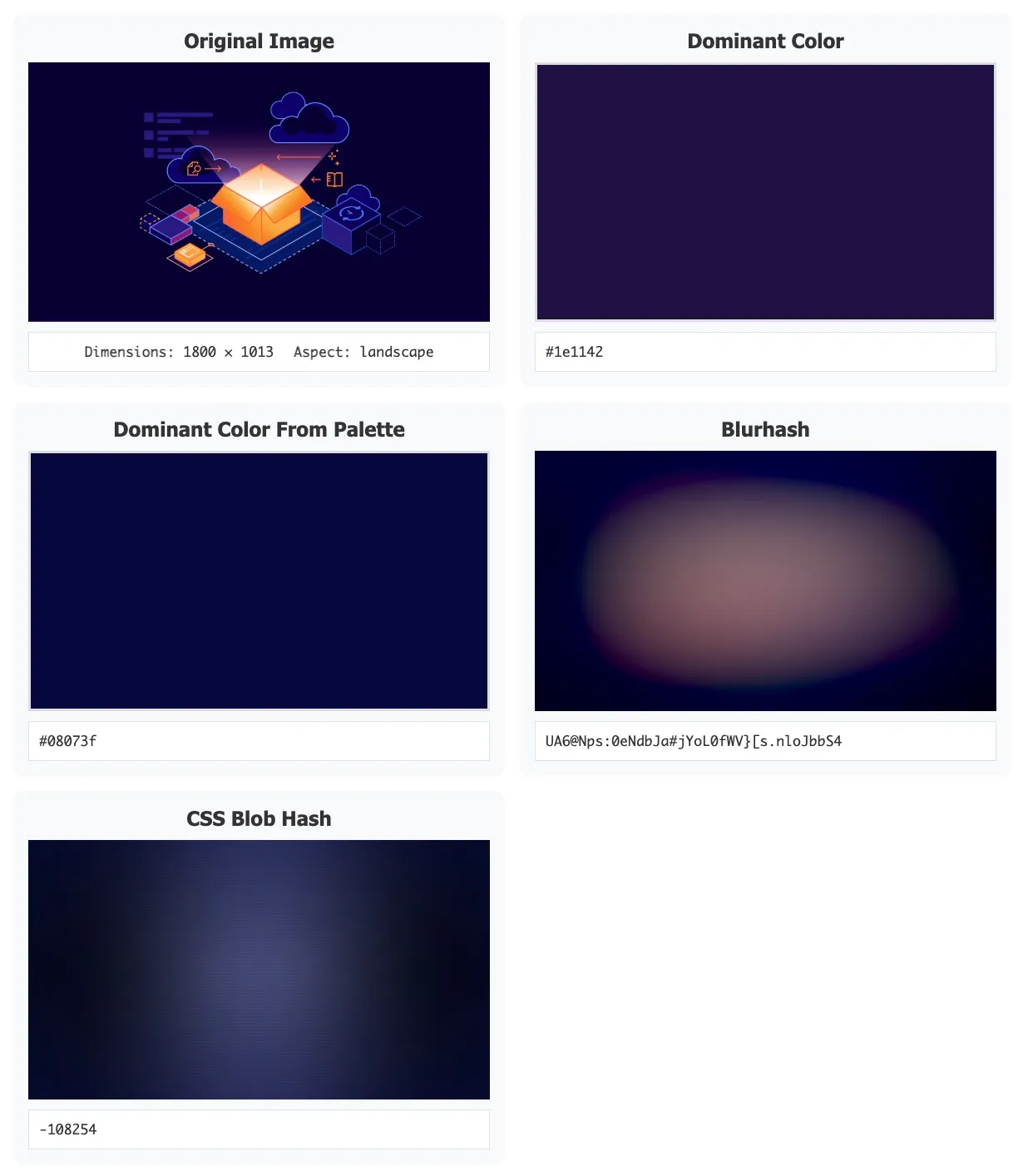

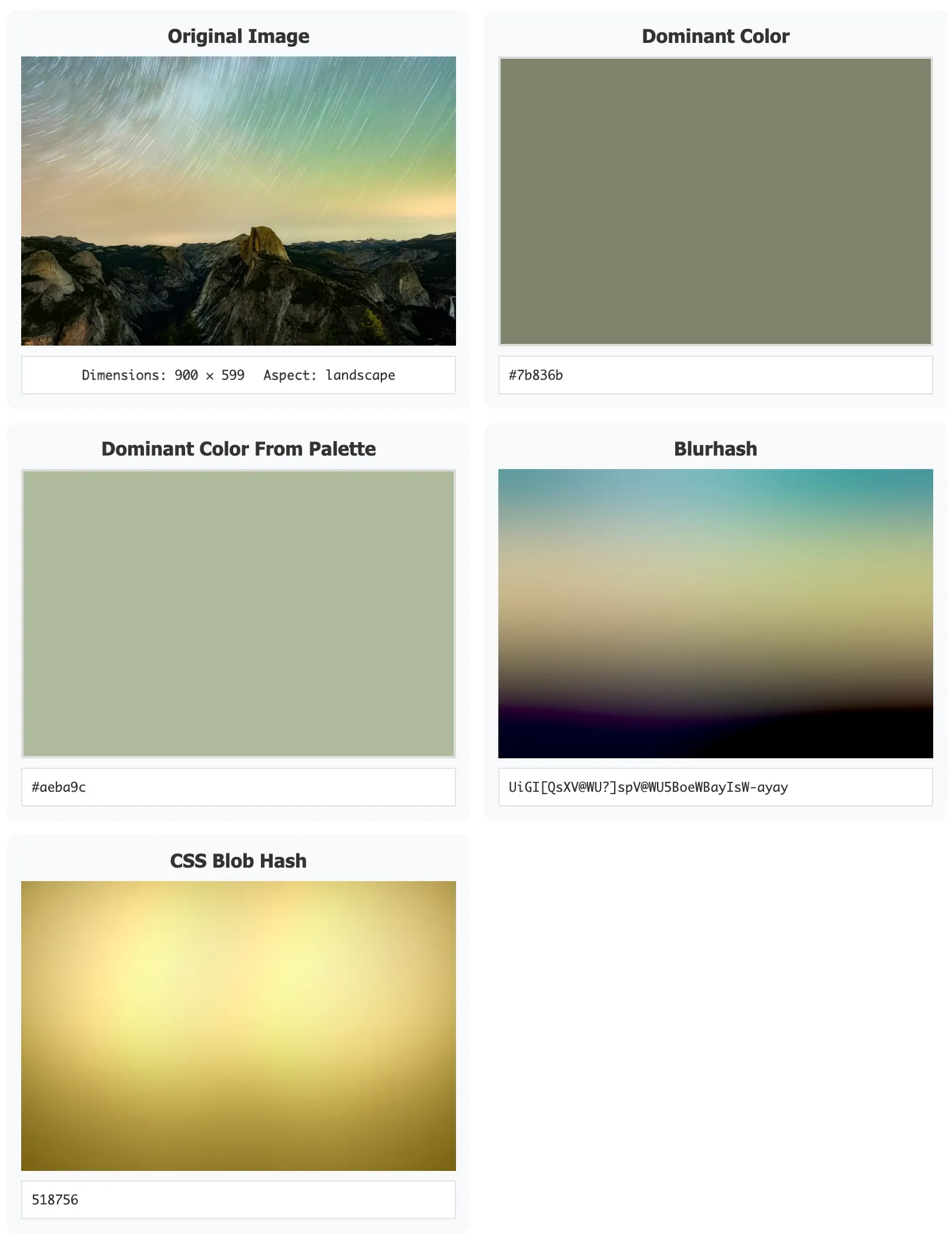

I implemented 4 different image placeholder algorithms:

Dominant Color (easy)

The simplest approach I could think of was to squish the whole image down into one pixel, and let the image software decide what the color should be. This works surprisingly well given the lack of sophistication! The whole implementation fits in just a few lines:

```typescript
async function getDominantColor(image: ReadableStream): Promise<string> {
  let rgbImage = await env.IMAGES.input(image)
    .transform({ width: 1, height: 1, fit: "cover" })
    .output({ format: "rgb" });

  let rgbImageBuffer = await rgbImage.response().arrayBuffer();
  let pixelData = new Uint8Array(rgbImageBuffer);

  let r = pixelData[0];
  let g = pixelData[1];
  let b = pixelData[2];

  return `#${r.toString(16).padStart(2, "0")}${g.toString(16).padStart(2, "0")}${b.toString(16).padStart(2, "0")}`;
}
```

Dominant Color from a Palette

Averaging all of the colors together sometimes creates a muddy-looking output. This approach resizes the image to a size that is easy to work with in the Workers environment and uses the underlying Modified Median Cut Quantization (MMCQ) algorithm from the color-thief library to group colors together. If the image has a lot of a single color, such as text on a white background, it will tend to pick out this main color instead of averaging it with the rest of the colors in the image.
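To make the difference concrete, here is a small sketch that compares a plain average with the most populated color bucket. It is deliberately not MMCQ: `mostCommonBucket` is a hypothetical, much cruder stand-in that sorts each channel into 8 fixed levels, and all names here are illustrative only.

```typescript
type RGB = [number, number, number];

// Plain per-channel average, roughly what squashing to one pixel approximates.
function averageColor(pixels: RGB[]): RGB {
  if (pixels.length === 0) throw new Error("No pixels");
  let r = 0, g = 0, b = 0;
  for (const [pr, pg, pb] of pixels) {
    r += pr;
    g += pg;
    b += pb;
  }
  const n = pixels.length;
  return [Math.round(r / n), Math.round(g / n), Math.round(b / n)];
}

// Naive stand-in for a real quantizer: each channel is split into 8 levels
// (32 values wide) and the centre of the fullest bucket is returned.
function mostCommonBucket(pixels: RGB[]): RGB {
  if (pixels.length === 0) throw new Error("No pixels");
  const counts = new Map<number, number>();
  let bestKey = 0;
  let bestCount = 0;
  for (const [r, g, b] of pixels) {
    const key = ((r >> 5) << 6) | ((g >> 5) << 3) | (b >> 5);
    const count = (counts.get(key) ?? 0) + 1;
    counts.set(key, count);
    if (count > bestCount) {
      bestKey = key;
      bestCount = count;
    }
  }
  const centre = (level: number) => level * 32 + 16;
  return [centre((bestKey >> 6) & 7), centre((bestKey >> 3) & 7), centre(bestKey & 7)];
}
```

With three white pixels and one black pixel, the average lands on a mid gray while the bucket approach stays close to white, which is the "text on a white background" effect described above.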

```typescript
async function getDominantColorFromPalette(image: ReadableStream): Promise<string> {
  let rgbImage = await env.IMAGES.input(image)
    .transform({ width: 200, height: 200, fit: "cover" })
    .output({ format: "rgb" });

  let rgbImageBuffer = await rgbImage.response().arrayBuffer();
  let pixelData = new Uint8Array(rgbImageBuffer);

  // get a representative color palette from the image
  let palette = quantize(pixelData, 5);

  // get the most prominent color
  let dominantColor = palette[0];

  let r = dominantColor[0];
  let g = dominantColor[1];
  let b = dominantColor[2];

  return `#${r.toString(16).padStart(2, "0")}${g.toString(16).padStart(2, "0")}${b.toString(16).padStart(2, "0")}`;
}
```

Blurhash

https://blurha.sh/ is a clever approach that compresses an image into blurry gradients and packs that data into a small string like LEHV6nWB2yk8pyo0adR*.7kCMdnj. The TypeScript library works great on Workers, though note that it expects an rgba array as input.

I did have to jump through some hoops to get the correct dimensions of the new image. See the full code here.

```typescript
async function getBlurhash(image: ReadableStream, aspectRatioInfo: AspectRatioInfo): Promise<string> {
  let resizedImage = await env.IMAGES.input(image)
    .transform({ width: RESIZE_DIMENSION, height: RESIZE_DIMENSION, fit: "contain" })
    .output({ format: "rgba" });

  let resizedImageBuffer = await resizedImage.response().arrayBuffer();
  let pixelDataClamped = new Uint8ClampedArray(resizedImageBuffer);

  // rgba output has 4 bytes per pixel, so length / 4 is the pixel count
  let { width: resizedWidth, height: resizedHeight } = getResizedDimensions(
    aspectRatioInfo,
    RESIZE_DIMENSION,
    pixelDataClamped.length / 4
  );

  return encodeBlurhash(pixelDataClamped, resizedWidth, resizedHeight, 4, 4);
}
```

CSS Blobhash

I cribbed from Lean’s implementation and lightly converted it for the Images binding. See the full implementation here.

```typescript
async function getCSSBlobHash(image: ReadableStream): Promise<number> {
  let resizedImage = await env.IMAGES.input(image)
    .transform({ width: 3, height: 2, fit: "squeeze" })
    .output({ format: "rgb" });

  let resizedImageBuffer = await resizedImage.response().arrayBuffer();
  let pixelDataClamped = new Uint8ClampedArray(resizedImageBuffer);

  return encodeCSSBlobHash(pixelDataClamped);
}
```

Results

Playing around with the output, I was quite surprised to find that picking out the dominant color from a palette consistently produced what I thought was the best balance. The blurry approaches are often very pleasing for photographs, but my use case will have a lot of images designed for showing up in a social media feed, and these feel a little off when blurred. Sometimes keeping it simple is the best approach.

I’ll close out with some screenshots of examples. Feel free to play with the generator with your own images: https://github.com/jmorrell/low-quality-image-placeholders-on-cloudflare-workers

[1] I’ve filed tickets internally to get these issues fixed